As we surge ahead into a future powered by artificial intelligence (AI), this technology’s darker side is becoming increasingly apparent. Despite AI’s celebrated ability to perform advanced tasks and make life easier, there’s growing unease about its potential to perpetuate racial bias.

This troubling phenomenon is cropping up across multiple sectors, including healthcare, law enforcement, and big tech. It’s a development that’s attracting widespread concern and criticism, from scholars to everyday users, turning what was once heralded as a force for progress into a possible tool for inequality.

A Flawed Mirror: AI’s Reflection of Human Bias

At the heart of this issue lies a fundamental flaw in AI systems: they learn from the data we provide, reflecting and sometimes even amplifying our existing biases. As Reid Blackman, digital ethics advisor and author of “Ethical Machines,” succinctly put it, “AI is just software that learns by example. When you feed it examples riddled with biases or prejudiced attitudes, the output it generates will inevitably mirror those same biases.”

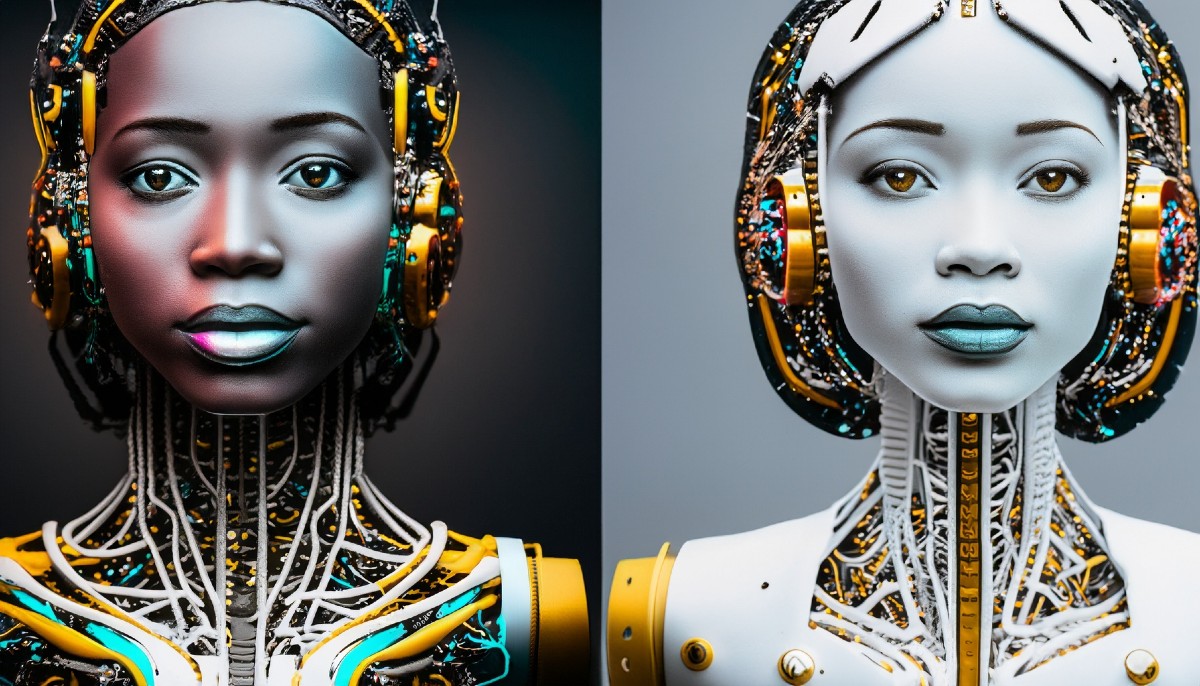

But what do these “outputs” look like in the real world? Consider Joy Buolamwini, a researcher at MIT, who found that some facial analysis software could not detect her face until she covered her dark skin with a white mask. Her research found that a major U.S. technology company’s facial recognition training set was more than 77% male and 83% white, even though the company claimed a 97% accuracy rate. According to a report in the Journal of Biometrics and Biostatistics, facial recognition accuracy was poorest for Black women between the ages of 18 and 30.
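To see how a single headline accuracy figure can coexist with poor results for one group, it helps to break accuracy down by group. The following is a minimal Python sketch, not any company's actual evaluation method. The function names, the group labels, and the evaluation records are all hypothetical and made up purely for illustration; they are not taken from the research described above.

```python
from collections import defaultdict


def overall_accuracy(records):
    """Share of correct results across all (group, correct) records."""
    records = list(records)
    return sum(int(correct) for _, correct in records) / len(records)


def accuracy_by_group(records):
    """Share of correct results computed separately for each group."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, correct in records:
        totals[group] += 1
        hits[group] += int(correct)
    return {group: hits[group] / totals[group] for group in totals}


# Hypothetical, made-up evaluation results (NOT real study data):
# group A dominates the test set, so its results dominate the average.
records = (
    [("group A", True)] * 90
    + [("group B", True)] * 5
    + [("group B", False)] * 5
)

print(overall_accuracy(records))   # headline figure across everyone
print(accuracy_by_group(records))  # the same results, split by group
```

In this invented example the overall figure looks strong because the well-served group makes up most of the test set, while the smaller group is recognized correctly only half the time. Reporting accuracy per group is one simple way to surface that gap.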

From Inaccuracies to Insults: The Real-World Consequences of Biased AI

Even when these biases don’t lead to outright errors, they can result in deeply troubling outcomes. In a study conducted by institutions including Johns Hopkins University and the Georgia Institute of Technology, AI-trained robots were asked to designate which blocks, each showing a human face of a different race, were criminals. The results? Blocks with Black faces were consistently labeled as criminals.

Sometimes, the damage is less direct but equally insidious. In 2015, Google Photos’ AI-based search tool made headlines for returning images of Black people when users searched for the term “gorilla.” Despite promises to fix the issue, the problem persists, a painful reminder of AI’s potential for racial bias.

Even as these issues make headlines, 53% of Americans believe that problems with racial and ethnic bias in the workplace would lessen if employers used AI more in the hiring process, according to a Pew Research poll. The potential for AI to fight against racial bias remains, but it must be developed and deployed with care and transparency.

Dermatology: AI’s Bias Shows on the Skin

Nowhere is this clearer than in dermatology, where AI is increasingly used to diagnose and support decisions around skin conditions. However, the limitations of the datasets used to train these AI models are beginning to show. For example, in one training dataset for dermatologists, 92% of the images of Covid skin lesions showed lighter skin and a mere 8% showed medium skin tones. Alarmingly, there were no images showing lesions on dark skin tones.

Melanoma, a skin cancer, is rare in African Americans, but poor training and education on dark skin tones in dermatology make it more likely to go undiagnosed in them than in white Americans, leading to a drastically lower survival rate. This alarming situation reflects the urgent need for diverse training datasets in AI.
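A basic composition check can catch gaps like the one in the Covid lesion dataset before a model is ever trained. The Python sketch below is one possible approach under stated assumptions: the function name, the labels "light", "medium", and "dark", and the 10% minimum share are hypothetical choices made for illustration. Only the 92% / 8% / 0% split comes from the dataset described above.

```python
from collections import Counter


def composition_report(labels, expected_groups, min_share=0.10):
    """Return each expected group's share of the dataset, plus the groups
    whose share falls below min_share (including groups with no images)."""
    if not labels:
        raise ValueError("dataset is empty")
    counts = Counter(labels)
    total = len(labels)
    shares = {group: counts.get(group, 0) / total for group in expected_groups}
    underrepresented = [g for g in expected_groups if shares[g] < min_share]
    return shares, underrepresented


# Skin-tone labels mirroring the split reported for the Covid lesion dataset:
# 92% lighter skin, 8% medium skin tones, no dark skin tones.
labels = ["light"] * 92 + ["medium"] * 8

shares, flagged = composition_report(labels, ["light", "medium", "dark"])
print(shares)   # share of images per skin-tone group
print(flagged)  # groups below the (assumed) 10% threshold
```

Listing the expected groups explicitly matters here: a group with zero images never appears in the raw counts, so it can only be flagged if the check knows to look for it.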

Big Tech’s Troubling Bias

Apple, a giant in the tech industry, was sued in 2022 over allegations that the Apple Watch’s blood oxygen sensor was racially biased against those with darker skin tones. Despite acknowledging the potential difficulties of pulse oximetry measurements on darker skin, Apple claimed its device “automatically adjusts . . . to ensure adequate signal resolution across the range of human skin tones.” But does it?

Twitter, another tech titan, had to scrap its photo cropping feature in 2021 after it was found to favor white faces, a blatant display of racial bias in an algorithm meant to enhance the user experience.

Law Enforcement: Racial Bias by Design

The use of AI in law enforcement has come under fire for its propensity to amplify racial bias. The root of the issue lies in the datasets used to train these systems, which overwhelmingly feature Black people. The New York Police Department, for instance, maintains a database of “gang-affiliated” people, 99% of whom are Black or Latino. Systems trained on such skewed data lead law enforcement agencies to arrest Black people at disproportionate rates, a disturbing trend highlighted by the Algorithmic Justice League.

Targeting Ethnic Groups: The Global Issue

This is not a problem confined to the United States. Israeli authorities reportedly use facial recognition software, known as Red Wolf, to track Palestinians and restrict their passage through key checkpoints. The system uses a color-coded rating to decide who can pass, fueling allegations of “automated apartheid.”

Meanwhile, China has faced backlash for using AI and facial recognition technology to racially profile the Uyghurs, a predominantly Muslim minority group. These examples highlight how the misuse of AI can have severe, real-world consequences for targeted ethnic groups.

The increasing pervasiveness of AI in our everyday lives is a testament to its potential. However, as this technology continues to evolve and influence various aspects of society, it’s essential to address its flaws and biases. AI can only be as impartial as the data we feed it, making it our collective responsibility to ensure that this data is diverse, balanced, and truly representative of the people it’s intended to serve.

The time has come to shine a spotlight on the role of AI in tech and its contribution to racial bias concerns. Addressing this issue requires a multipronged approach involving all stakeholders: AI developers, ethicists, policymakers, and users alike. It’s only through collaborative and concerted effort that we can hope to harness the full power of AI while minimizing its potential for harm.